In the animation industry, 3D modelers typically rely on front and back non-overlapped

concept designs to guide the 3D modeling of anime characters. However, there is currently a

lack of automated approaches for generating anime characters directly from these 2D designs.

In light of this, we explore a novel task of reconstructing anime characters from

non-overlapped views. This presents two main challenges: existing multi-view approaches

cannot be directly applied due to the absence of overlapping regions, and there is a

scarcity of full-body anime character data and standard benchmarks. To bridge the gap, we

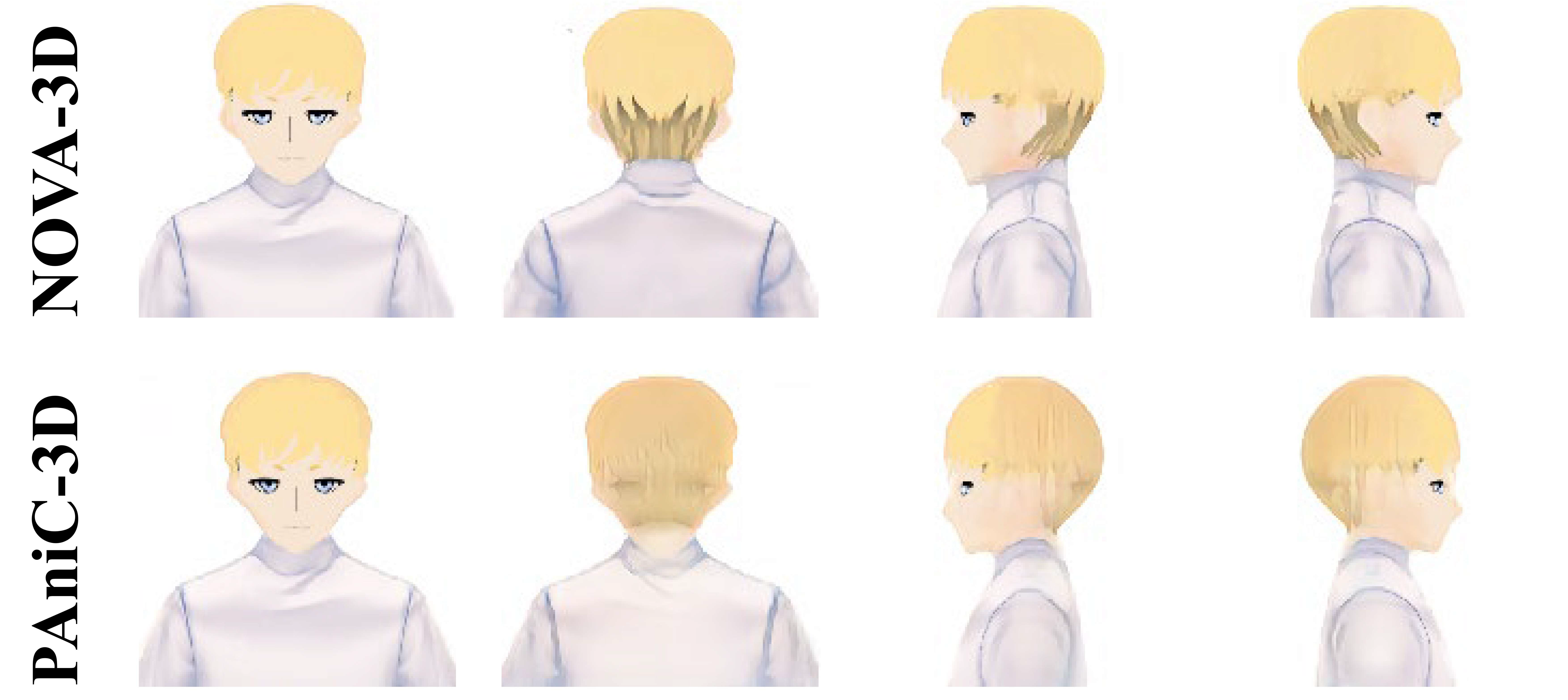

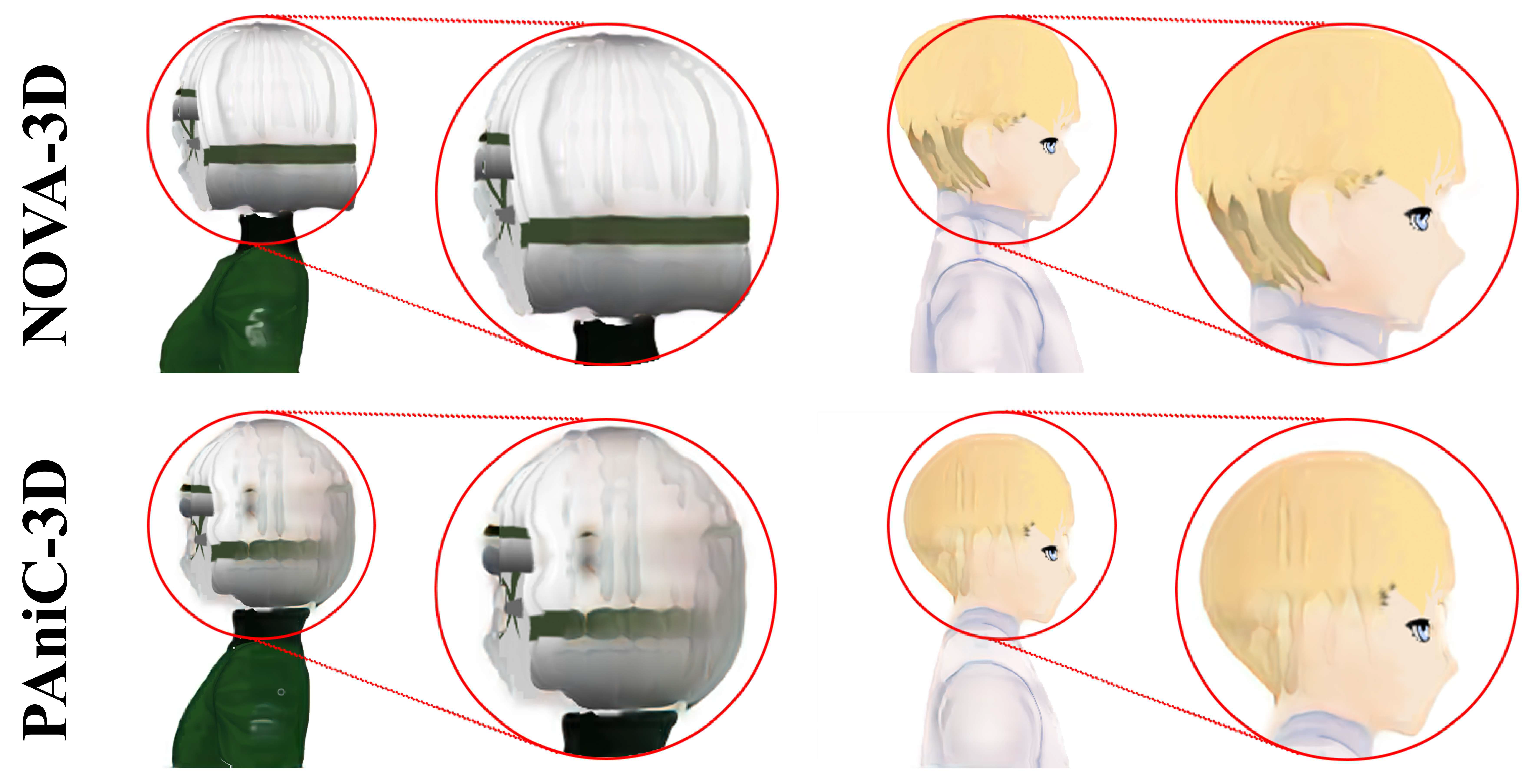

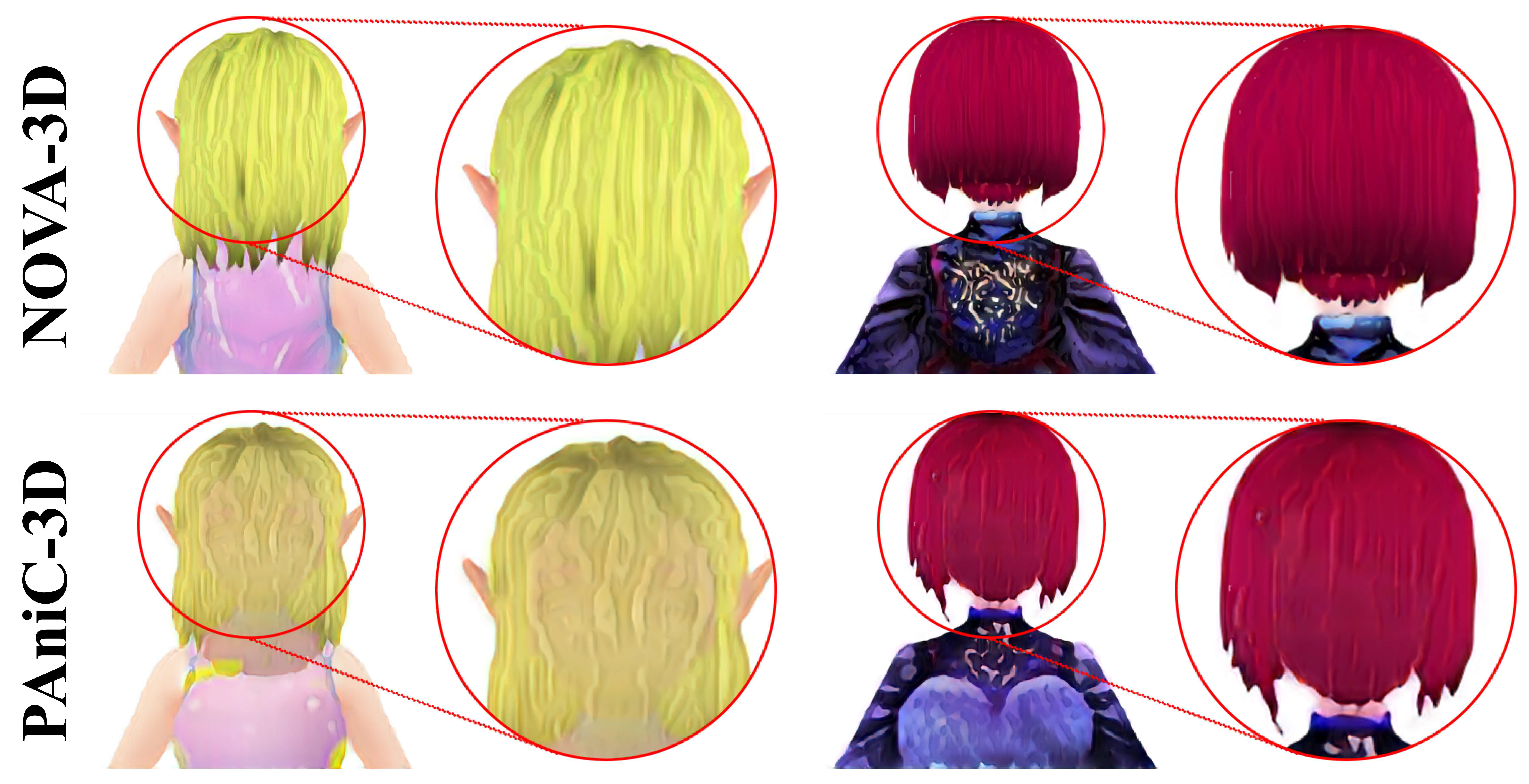

present Non-Overlapped Views for 3D Anime Character

Reconstruction (NOVA-3D), a new framework that implements a method for view-aware feature

fusion to learn 3D-consistent features effectively and synthesizes full-body anime

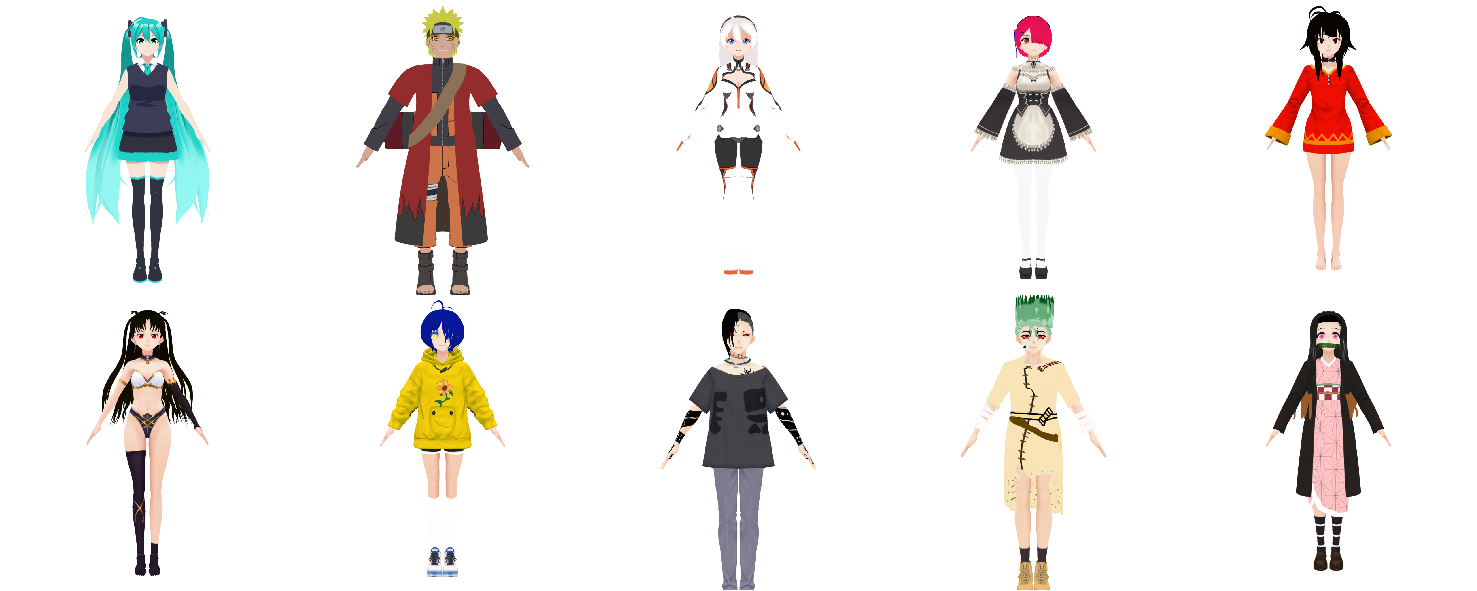

characters from non-overlapped front and back views directly. To facilitate this line of

research, we collected the NOVA-Human dataset, which comprises multi-view images and

accurate camera parameters for 3D anime characters. Extensive experiments demonstrate that

the proposed method outperforms baseline approaches, achieving superior reconstruction of

anime characters with exceptional detail fidelity. In addition, to further verify the

effectiveness of our method, we applied it to the animation head reconstruction task and

improved the state-of-the-art baseline to 94.453 in SSIM, 7.726 in LPIPS, and 19.575 in PSNR

on average.
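
For readers unfamiliar with the PSNR metric reported above, the following is a minimal sketch of the standard definition, PSNR = 10 log10(MAX^2 / MSE). It is not the evaluation code used in this work: the function name `psnr`, the flat-list image representation, and the assumption that pixel values lie in [0, 1] are all illustrative choices, and the abstract does not state the value range or averaging protocol behind the reported number.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio (in dB) between two flattened images.

    `reference` and `rendered` are equal-length sequences of pixel values.
    `max_value` is the largest possible pixel value (assumed 1.0 here).
    """
    if len(reference) != len(rendered) or len(reference) == 0:
        raise ValueError("images must be non-empty and have the same size")

    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)

    # Identical images have zero error, so PSNR is unbounded.
    if mse == 0:
        return math.inf

    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: every pixel is off by 0.1, so MSE is about 0.01.
reference = [0.0, 0.5, 1.0, 0.25]
rendered = [0.1, 0.4, 0.9, 0.35]
score = psnr(reference, rendered)  # roughly 20 dB
```

Higher PSNR means a smaller pixel-wise error, so it complements perceptual metrics such as LPIPS, where lower values are better.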